Earlier this year, scientists discovered a peculiar term appearing in published papers: “vegetative electron microscopy”.

This phrase, which sounds technical but is actually nonsense, has become a “digital fossil”: an error preserved and reinforced in artificial intelligence (AI) systems that is nearly impossible to remove from our knowledge repositories.

Like biological fossils trapped in rock, these digital artefacts may become permanent fixtures in our information ecosystem.

The case of vegetative electron microscopy offers a troubling glimpse into how AI systems can perpetuate and amplify errors throughout our collective knowledge.

A bad scan and an error in translation

Vegetative electron microscopy appears to have originated through a remarkable coincidence of unrelated errors.

First, two papers from the 1950s, published in the journal Bacteriological Reviews, were scanned and digitised.

However, the digitising process erroneously combined “vegetative” from one column of text with “electron” from another. As a result, the phantom term was created.
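To make the column mix-up concrete, here is a small sketch using invented text (not the actual 1950s pages). It assumes a naive extraction step that reads straight across a two-column page instead of finishing one column before starting the next. The real digitisation pipeline for these papers is not described in the article, so treat this as an illustration of the failure mode only.

```python
# Hypothetical two-column page, invented for illustration (not the 1950s text).
# Each list holds the lines of one column, top to bottom.
left_column = ["structure of the", "vegetative", "cell wall"]
right_column = ["as revealed by", "electron microscopy", "of thin sections"]

def extract_row_by_row(left, right):
    """Naive extraction: read straight across the page, so each left-column
    line is fused with the right-column line printed beside it."""
    return [f"{left_line} {right_line}" for left_line, right_line in zip(left, right)]

def extract_column_by_column(left, right):
    """Layout-aware extraction: finish the left column before the right one."""
    return left + right

print(" / ".join(extract_row_by_row(left_column, right_column)))
# structure of the as revealed by / vegetative electron microscopy / cell wall of thin sections
print(" ".join(extract_column_by_column(left_column, right_column)))
# structure of the vegetative cell wall as revealed by electron microscopy of thin sections
```

Only the row-by-row reading produces the phantom phrase; the underlying words are identical in both cases.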

Decades later, “vegetative electron microscopy” turned up in some Iranian scientific papers. In 2017 and 2019, two papers used the term in English captions and abstracts.

This appears to be due to a translation error. In Farsi, the words for “vegetative” and “scanning” differ by only a single dot.
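The sketch below checks the one-dot difference at the level of Unicode characters. It assumes the word pair is رویشی (“vegetative”) and روبشی (“scanning”, as in the Persian name for the scanning electron microscope); the article does not spell out the words. Written in the middle of a word, Farsi yeh carries two dots below and beh carries one, so the two words differ by a single dot on the page.

```python
import unicodedata

# Assumed Persian words (not given in the article):
#   رویشی  "vegetative"     روبشی  "scanning"
# Escapes make the exact code points unambiguous.
vegetative_fa = "\u0631\u0648\u06cc\u0634\u06cc"
scanning_fa = "\u0631\u0648\u0628\u0634\u06cc"

def char_differences(a, b):
    """Return (index, char_in_a, char_in_b) wherever two equal-length strings differ."""
    return [(i, x, y) for i, (x, y) in enumerate(zip(a, b)) if x != y]

for i, x, y in char_differences(vegetative_fa, scanning_fa):
    print(i, unicodedata.name(x), "vs", unicodedata.name(y))
# 2 ARABIC LETTER FARSI YEH vs ARABIC LETTER BEH
```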

An error on the rise

The upshot? As of today, “vegetative electron microscopy” appears in 22 papers, according to Google Scholar. One was the subject of a contested retraction from a Springer Nature journal, and Elsevier issued a correction for another.

The term also appears in news articles discussing subsequent integrity investigations.

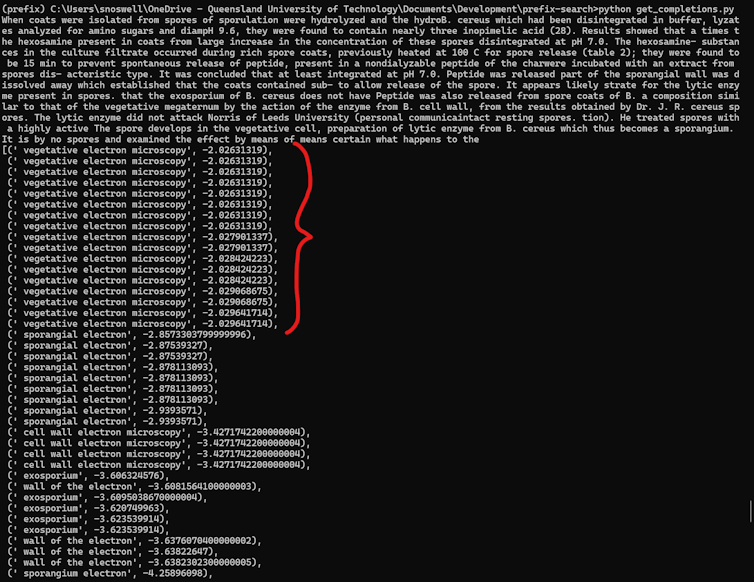

Vegetative electron microscopy began appearing more frequently in the 2020s. To find out why, we had to peer inside modern AI models and do some archaeological digging through the vast layers of data they were trained on.

Empirical evidence of AI contamination

The large language models behind modern AI chatbots such as ChatGPT are “trained” on huge amounts of text to predict the likely next word in a sequence. The exact contents of a model’s training data are often a closely guarded secret.
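A toy illustration of why next-word prediction can reproduce an error: the sketch below counts which word follows which in a tiny, hypothetical corpus and then always picks the most frequent continuation. Real large language models are neural networks over tokens, not bigram count tables, so this shows only the principle: if an error is common enough in the training text, predicting the “likely” next word will repeat it.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for text in corpus:
        words = text.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Most frequent next word seen in training, or None if the word is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows, start, n_words):
    """Greedily extend `start` by repeatedly choosing the most frequent next word."""
    out, word = [], start
    for _ in range(n_words):
        word = predict_next(follows, word)
        if word is None:
            break
        out.append(word)
    return " ".join(out)

# Hypothetical scraped corpus in which the error outnumbers the correct term.
corpus = [
    "cells were imaged using vegetative electron microscopy",
    "spores were examined using vegetative electron microscopy",
    "surfaces were imaged using scanning electron microscopy",
]
model = train_bigrams(corpus)
print(generate(model, "using", 3))  # vegetative electron microscopy
```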

To test whether a model “knew” about vegetative electron microscopy, we input snippets of the original papers to find out if the model would complete them with the nonsense term or with more sensible alternatives.
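A minimal sketch of this kind of completion probe follows. The article does not give the exact prompts, models, or scoring used, so the prompts and the two stand-in “models” here are hypothetical; a real probe would wrap calls to actual language models where the stubs are, and would likely sample several completions per prompt.

```python
from typing import Callable, Dict, List

TARGET = "vegetative electron microscopy"

def probe_models(models: Dict[str, Callable[[str], str]],
                 prompts: List[str],
                 target: str = TARGET) -> Dict[str, float]:
    """For each model, return the fraction of prompts whose completion
    contains `target` (case-insensitive)."""
    results = {}
    for name, complete in models.items():
        hits = sum(target in complete(prompt).lower() for prompt in prompts)
        results[name] = hits / len(prompts)
    return results

# Stand-in "models" for demonstration only (hypothetical).
def contaminated_stub(prompt: str) -> str:
    return " vegetative electron microscopy (VEM) of the samples"

def clean_stub(prompt: str) -> str:
    return " scanning electron microscopy of the samples"

# Hypothetical snippets that stop just before the method name.
prompts = ["The cell surface was examined using", "Images were obtained by"]
print(probe_models({"model_a": contaminated_stub, "model_b": clean_stub}, prompts))
# {'model_a': 1.0, 'model_b': 0.0}
```

Running the same prompt set against models of different vintages is what lets a probe like this show which models have picked up the term.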

The results were revealing. OpenAI’s GPT-3 consistently completed phrases with “vegetative electron microscopy”. Earlier models such as GPT-2 and BERT did not. This pattern helped us isolate when and where the contamination occurred.

We also found the error persists in later models, including GPT-4o and Anthropic’s Claude 3.5. This suggests the nonsense term may now be permanently embedded in AI knowledge bases.

By comparing what we know about the training datasets of different models, we identified the CommonCrawl dataset of scraped internet pages as the most likely vector through which AI models first learned this term.
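One simple way to compare training data across models is set arithmetic: keep the sources shared by models that produce the term, then discard any source also used by a model that does not. The sketch below uses the publicly reported training sources for GPT-2, BERT and GPT-3 (simplified labels) and the probe results described above. It assumes that any model trained on a contaminated source would reproduce the term, which is a simplification. On its own, the set difference leaves several candidates, so it only narrows the search.

```python
# Publicly reported training sources (simplified labels).
# GPT-4o and Claude 3.5 are omitted: their training data is not disclosed.
training_sources = {
    "GPT-2": {"WebText"},
    "BERT": {"BooksCorpus", "Wikipedia"},
    "GPT-3": {"CommonCrawl", "WebText2", "Books1", "Books2", "Wikipedia"},
}
# Probe results as described in the article.
produces_term = {"GPT-2": False, "BERT": False, "GPT-3": True}

def candidate_sources(sources, produces):
    """Sources common to every model that produces the term, minus any
    source used by a model that does not (a heuristic, not proof)."""
    positive = [sources[m] for m, hit in produces.items() if hit]
    negative = [sources[m] for m, hit in produces.items() if not hit]
    candidates = set.intersection(*positive) if positive else set()
    for used in negative:
        candidates -= used
    return candidates

print(sorted(candidate_sources(training_sources, produces_term)))
# ['Books1', 'Books2', 'CommonCrawl', 'WebText2']
```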

The scale problem

Finding errors of this sort is not easy. Fixing them may be almost impossible.

One reason is scale. The CommonCrawl dataset, for example, is millions of gigabytes in size. For most researchers outside large tech companies, the computing resources required to work at this scale are inaccessible.
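For a sense of scale: assuming a hypothetical sustained read speed of 1 GB per second, one pass over a million gigabytes takes a million seconds, or about 11.6 days, on a single machine. Even a basic search therefore has to stream compressed data rather than load it. The sketch below assumes the corpus is split into gzip-compressed plain-text shards, a simplification of Common Crawl’s actual file formats, and counts lines mentioning the phrase while using constant memory per shard.

```python
import gzip

PHRASE = "vegetative electron microscopy"

def count_phrase_in_shard(path, phrase=PHRASE):
    """Stream one gzip-compressed text shard line by line and count lines
    containing `phrase` (case-insensitive). Note: a phrase split across
    two lines is missed by this simple line-based scan."""
    phrase = phrase.lower()
    hits = 0
    with gzip.open(path, "rt", encoding="utf-8", errors="replace") as f:
        for line in f:
            if phrase in line.lower():
                hits += 1
    return hits

def count_phrase(shard_paths, phrase=PHRASE):
    """Total hits across many shards; each shard could run on a separate worker."""
    return sum(count_phrase_in_shard(p, phrase) for p in shard_paths)
```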

Another reason is a lack of transparency in commercial AI models. OpenAI and many other developers refuse to provide precise details about the training data for their models. Research efforts to reverse engineer some of these datasets have also been stymied by copyright takedowns.

When errors are found, there is no easy fix. Simple keyword filtering could deal with specific terms such as vegetative electron microscopy. However, it would also eliminate legitimate references (such as this article).
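The trade-off is easy to see in a minimal blocklist filter. The documents below are hypothetical: one uses the term erroneously, one merely discusses it, and one is unaffected. A plain substring match cannot tell use from mention, so the legitimate discussion is dropped along with the error.

```python
BLOCKLIST = ["vegetative electron microscopy"]

def keyword_filter(documents, blocklist=BLOCKLIST):
    """Split documents into (kept, dropped): any document mentioning a
    blocklisted phrase (case-insensitive) is dropped."""
    kept, dropped = [], []
    for doc in documents:
        text = doc.lower()
        if any(phrase in text for phrase in blocklist):
            dropped.append(doc)
        else:
            kept.append(doc)
    return kept, dropped

docs = [
    "Samples were imaged by vegetative electron microscopy.",           # erroneous use
    "The phrase 'vegetative electron microscopy' is a digital fossil.",  # legitimate discussion
    "Samples were imaged by scanning electron microscopy.",             # unaffected
]
kept, dropped = keyword_filter(docs)
print(len(kept), len(dropped))  # 1 2
```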

More fundamentally, the case raises an unsettling question. How many other nonsensical terms exist in AI systems, waiting to be discovered?

Implications for science and publishing

This “digital fossil” also raises important questions about knowledge integrity as AI-assisted research and writing become more common.

Publishers have responded inconsistently when notified of papers including vegetative electron microscopy. Some have retracted affected papers, while others defended them. Elsevier notably attempted to justify the term’s validity before eventually issuing a correction.

We don’t yet know if other such quirks plague large language models, but it is highly likely. Either way, the use of AI systems has already created problems for the peer-review process.

For instance, observers have noted the rise of “tortured phrases” used to evade automated integrity software, such as “counterfeit consciousness” instead of “artificial intelligence”. Additionally, phrases such as “I am an AI language model” have been found in other retracted papers.

Some automatic screening tools such as Problematic Paper Screener now flag vegetative electron microscopy as a warning sign of possible AI-generated content. However, such approaches can only address known errors, not undiscovered ones.
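The limitation follows directly from how fingerprint matching works. The sketch below is a hypothetical screener in the spirit of such tools, not Problematic Paper Screener’s actual code. Its fingerprint list is built from the examples in this article, including the widely reported tortured phrase “counterfeit consciousness”. It catches any listed phrase even across a line break, but a nonsense term nobody has listed passes straight through.

```python
import re

# Hypothetical fingerprint list built from examples discussed in this article.
FINGERPRINTS = {
    "vegetative electron microscopy": "known nonsense term",
    "counterfeit consciousness": "tortured phrase for 'artificial intelligence'",
    "i am an ai language model": "chatbot boilerplate",
}

def screen(text, fingerprints=FINGERPRINTS):
    """Return (phrase, reason) pairs for every fingerprint found in `text`.
    Whitespace is normalised so phrases broken across lines still match."""
    normalised = re.sub(r"\s+", " ", text.lower())
    return [(phrase, reason) for phrase, reason in fingerprints.items()
            if phrase in normalised]

print(screen("Cells were imaged by vegetative electron\nmicroscopy."))
# [('vegetative electron microscopy', 'known nonsense term')]
print(screen("Cells were imaged by some as-yet-undiscovered nonsense term."))
# []
```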

Living with digital fossils

The rise of AI creates opportunities for errors to become permanently embedded in our knowledge systems, through processes no single actor controls. This presents challenges for tech companies, researchers, and publishers alike.

Tech companies must be more transparent about training data and methods. Researchers must find new ways to evaluate information in the face of convincing AI-generated nonsense. Scientific publishers must improve their peer-review processes to spot both human and AI-generated errors.

Digital fossils reveal not just the technical challenge of monitoring massive datasets, but the fundamental challenge of maintaining reliable knowledge in systems where errors can become self-perpetuating.

Aaron J. Snoswell, Research Fellow in AI Accountability, Queensland University of Technology; Kevin Witzenberger, Research Fellow, GenAI Lab, Queensland University of Technology, and Rayane El Masri, PhD Candidate, GenAI Lab, Queensland University of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Global News Post Fastest Global News Portal
